More than 600 technical communicators met for the annual STC Summit in Phoenix, AZ, to demonstrate and expand the many ways in which they add value for users, clients, and employers. That almost sounds like a non-theme for a conference, but it was the impression I took away time and again, no doubt prompted by my personal selection of sessions!

This thrust is very much in line with the STC’s revamped mission statement, which includes these objectives:

- [Support] technical communication professionals to succeed in today’s workforce and to grow into related career fields

- Define and publicize the economic contribution of technical communication practices […]

- Technical communication training fosters in practitioners habits […] that underpin their ability to successfully perform in many fields

I’ll describe my personal Summit highlights and the insights that resonated with me. For the mother of all STC Summit blogging, visit Sarah Maddox’s blog, where a summary post links to posts about no fewer than 10 individual sessions!

Connecting across silos with diverse skills

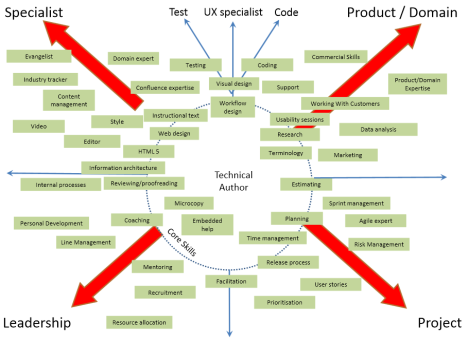

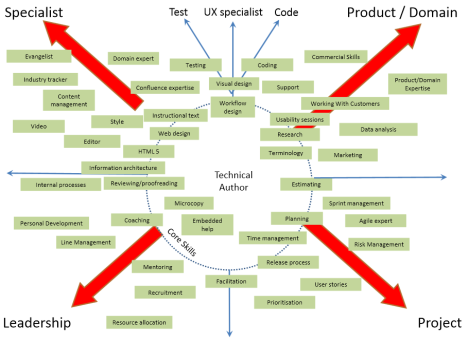

A good illustration of how easily tech comm skills and tasks connect with and seep into other job roles is Red Gate Software’s Technical Communication Skills Map. It appeared at least twice at the STC Summit: in STC CEO Chris Lyons’ opening remarks and again in Ben Woelk’s lightning talk.

Depending on their talents and tasks, a tech comm’er can collaborate closely with – or develop into – a product manager or project manager, a UX specialist or tester.

Standing united against “wicked ambiguity”

Jonathon Colman, content strategist at Facebook, took a very high-level view of our profession’s challenges in his keynote address on Sunday evening. Technical communication that travels millions of miles on NASA’s Voyager or that must last thousands of years unites us against ambiguity – regardless of our different skills and various everyday tasks.

Such ambiguity can become “wicked” in fields such as urban planning and climate change, because it makes the issue to be described hard to define and hard to fix with limited time and resources. The solutions, such as they exist, are expensive and hard to scope and to test. Still, we must at least attempt to describe a solution. Take nuclear waste, for example: tech comm must warn people to avoid any contact, in a message that remains recognizable and comprehensible for at least 10,000 years.

Jonathon ended on the hopeful note that we tech comm’ers can acknowledge wicked ambiguity, unite against it, race towards it, embrace it – and do the best we can.

Wielding the informal power of influence

Skills are not always enough to connect us tech comm’ers successfully with other teams and departments. Sometimes, conflicting objectives or incentives get in the way. Then we need to wield the informal power of influence. Kevin Lim from Google showed us how with witty, dry understatement – a pointed exercise in persuasion that never resorted to rhetorical pyrotechnics.

Influence, Kevin explained, is the “dark matter of project management” that allows us to gain the cooperation of others. We can acquire this informal power by authentically engaging colleagues with our skills and practices. (The “authenticity” is important to distinguish influence from sheer manipulation.) To optimize our chances for successful influence, we need to align our engagement with the company culture, the corporate strategy, and the objectives of key people, especially project managers and our boss.

Put everything under a common goal and engage: “Don’t have lunch by yourself. Bad writer, bad!” – appropriate advice for the Summit, too!

– Watch this space for more STC14 coverage coming soon!
